The skill of managing luck

April 22, 2018

Imagine I offered you a way to instantly improve your decision making. Would you take it?

Most wouldn’t. That’s because of how it improves hit rates. It doesn’t do it by winning against all odds. It doesn’t notice the detail no-one else noticed. It doesn’t make high-confidence, narrative-based claims.

We humans seem to be attracted to a bad process. To the unexpected, the unusual, the low probability. We’re attracted to luck - the more extreme the luck, the better.

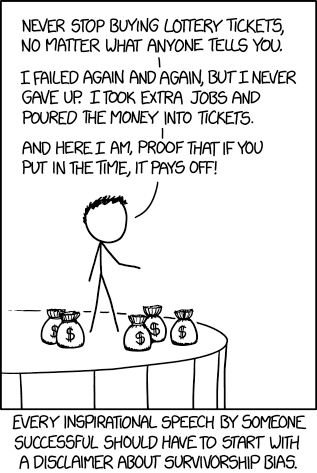

We seem to especially relish luck masquerading as skill.

We avoid the minuscule-probability mortality risks (sharks, snakes, plane crashes) because they’re rare. Stories about them stick out, creating dreaded risks. We accept the high-probability mortality risks (car crashes, smoking, bad diet) because they’re boring. Because they’re common. As Michael Mauboussin says:

The basic challenge is that we love stories and have a yearning to understand the relationship between cause and effect. As a result, statistical reasoning is hard, and we start to view the past as something that was inevitable.

Mauboussin’s book is a wonderful exploration of luck and skill. It’s particularly pointed on when to attribute an individual’s outcome to skill versus luck:

When skill plays a role in determining what happens, you can rely on specific evidence. In activities where luck is more important, the base rate should guide your prediction.

I’d like to slightly adapt this to think about our attraction to skill, and our aversion to things that deal with ‘luck’. Mauboussin defines luck as something where it is reasonable to assume that another outcome was possible, and better or worse. We naturally focus on narratives. We put weight on a few pieces of unusual evidence, rather than include and weigh many different pieces. We ignore base rates and how similar one decision is to another… and put huge weight on minor ways in which they are different.

Skill is boring. Skill is 10,000 hours of practice. Skill is consistency. Skill is a game of inches. Skill is picking battles to win wars. Skill is incremental. In contrast, we love upsets and underdogs. The little guy, with less practice, planning, and years of experience wins anyway… because they have heart, or hope, or something.

So, for my magic spell for improving decision making: algorithms.

Or, using the phrasing above: the skill of managing luck.

Let me call any systematic process based on a data-calibrated model an algorithm. Algorithms are often much better than humans at many decision tasks. Yet research shows we’re more likely to choose a human forecaster than an algorithm. And it’s even worse once we see how an algorithm performs, especially if it occasionally makes a wrong call. This is called algorithm aversion.

Why are we averse to algorithms? Berkeley Dietvorst, Cade Massey and Joseph Simmons have been looking into just that. As Berkeley explained to me, “We benchmark algorithms to perfect, and humans to mediocre.” If we see an 85% hit rate for an algorithm, we worry about the 15%. When we see a 60% hit rate for a human, we say “that’s better than chance!”. Some respondents in the study said they think people can learn and improve over time, and (implicitly) algorithms can’t. Most of us understand humans, and probably don’t have a good understanding of how algorithms work. So we avoid the unknown.

I have an additional idea about why we’re averse to algorithms. Algorithms are better at the grey, boring part of prediction. They don’t go for upsets, or underdogs, or black swans. They make their margin thriving in the grey. They know how to adjust for the difference between 55% and 65%. They weigh 30 pieces of information along with base rates, adjust accordingly, and still express uncertainty about the outcome.

They epitomize the skill of managing luck: they’re never certain, but constantly improving the odds. That’s boring! It’s not memorable or exciting. You don’t root for an algorithm. They never surprise you on the upside. They’re never a hero, defying the odds. They don’t ‘try hard’. They don’t offer a narrative. Algorithms are the odds-makers. They’re the grind.

They’re the system, man.

How can we learn to work alongside algorithms?

Since the potential upside is so big, it’s worth considering how we can get comfortable and confident working with algorithms. Here are a few ideas.

Encourage tweaking: Algorithms, by teasing apart statistical patterns, are going to be better at establishing a better base-rate prediction. But algorithms rely on data to be calibrated. That means they have a bias towards expecting history, or a baseline set of data gathered by convenience. Data-based algorithms are often too specific - they are optimized for the data on which they were trained. This usually means the predictions should be even less confident out of sample than we give them credit for.

Luckily, this type of tweaking may be exactly what we need psychologically to get comfortable with using algorithms. Dietvorst, Simmons and Massey found that allowing people to modify the algorithm’s output even slightly greatly increased their confidence in, and preference for, those forecasts. This is true even when the magnitude of the modification is fairly small. Which is lucky, because we make the worst forecasts when we make large adjustments to base-rate predictions. Tweaking leverages the strength of algorithms (better conditional base-rate predictions) and humans (including out-of-sample or un-modelled factors).
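To make the idea of a small, bounded tweak concrete, here is a minimal sketch in Python. It is only an illustration, not the procedure used in the research: the function name `tweak_forecast` and the five-percentage-point limit `max_adjustment` are my own assumptions. The algorithm supplies the base-rate probability, and the human may move it, but only within a narrow band.

```python
def tweak_forecast(model_forecast: float,
                   human_forecast: float,
                   max_adjustment: float = 0.05) -> float:
    """Move the model's probability toward the human's, within a small band.

    Both forecasts are probabilities between 0 and 1.
    max_adjustment is a hypothetical limit chosen for illustration.
    """
    # How far the human wants to move away from the algorithm's forecast.
    adjustment = human_forecast - model_forecast
    # Cap the move so a large narrative-driven override cannot swamp the base rate.
    adjustment = max(-max_adjustment, min(max_adjustment, adjustment))
    # Keep the final answer a valid probability.
    return max(0.0, min(1.0, model_forecast + adjustment))


# The model says 60%; the human, swayed by a good story, says 90%.
print(round(tweak_forecast(0.60, 0.90), 2))  # 0.65

# A modest disagreement passes through untouched.
print(round(tweak_forecast(0.60, 0.62), 2))  # 0.62
```

The cap is the whole point of the design: the person still gets a say, which seems to be what builds confidence, while the forecast stays close to the algorithm's base rate.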

Found in translation: The output of algorithms can sometimes be unexpected or unintuitive. This makes sense: AI/ML techniques aren’t meant to replicate human reasoning - they’re meant to complement it. Which means we must expect and accept some ‘weird’ outputs and explanations. As one online commenter quite aptly put it: “if interpretability is a relatively large concern then you probably shouldn’t be using a neural network in the first place.”

We are now creating AI/ML translators that try to communicate why the output is what it is. Because the truth is more complicated than we really want to know, it is always a gross simplification. But these translations are presented in ways which we’d understand: this predictor was given the most weight, etc. Our desire to put a prediction output in an intuitive format means we may mis-impose our own heuristics upon the actual model - in effect using cognitive biases to interpret machine learning. For those that have the patience and openness, that ‘conversation’ about two different ways of thinking, and struggling to find a middle ground, is likely to be fertile territory where we learn more about how we can improve our thinking.

What might this look like in practice?

I once heard an investor joke that “I try to pick the managers who will be lucky in the future”. If we get better at using algorithms to augment our judgement, that may be exactly how it feels. For both parties! The machines will bring their skills at managing luck, and we will bring our attraction to seeing how the specific is different from the average. And both we and the machines will be surprised at how ‘lucky’ we are when we work together.